Digital Domain Unveils Masquerade3

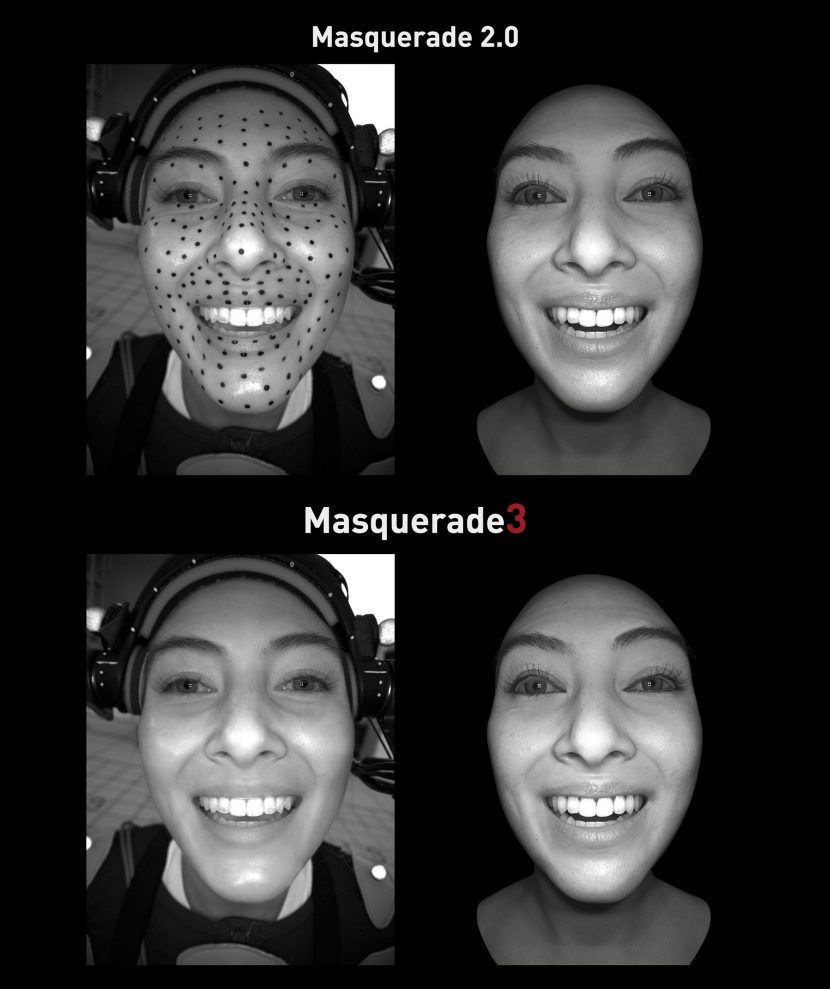

05.18.24Digital Domain has launched its latest advancement in facial motion capture systems called “Masquerade3.” The new Masquerade3 offers even higher quality but with a new markerless facial capture pipeline. The new system builds upon the success of its predecessor, Masquerade 2.0, which brought the intricacies of human facial dynamics to digital characters and delivered more emotive performances to their projects. Masquerade 2.0 was released in late 2020 and was used extensively on projects such as SheHulk.

Masquerade 2.0 required tracking dots but was used very successfully on shows such as She Hulk.

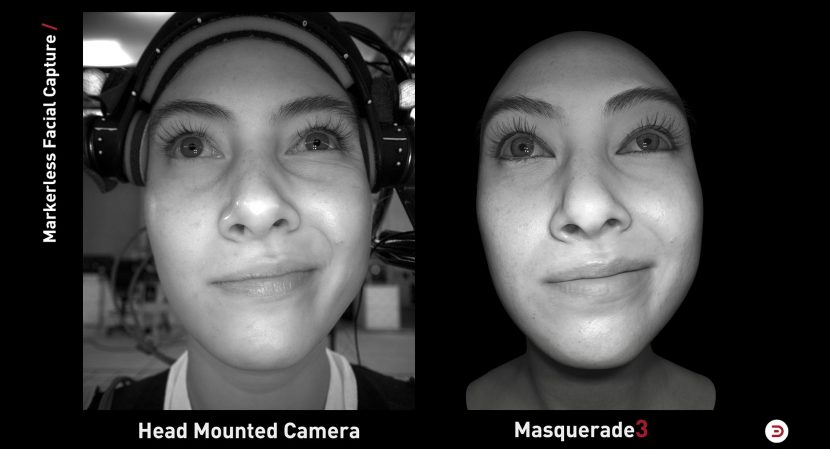

By eliminating the need for markers on performers’ faces, Masquerade3 provides greater efficiency on set, reduced stress on the actors and broader creativity. Masquerade3 is a versatile solution that can accommodate a wide range of project requirements and budgets. As a result, it can significantly reduce integration and rigging costs per shot. Moreover, it minimises the time actors spend on makeup, enabling them to focus more on their performances and on-set calibration. By offering faster capture-to-reconstruction times, Masquerade3 can help productions achieve results more quickly, streamline workflow, and reduce delays.

Perhaps one of Masquerade3’s most significant benefits is its markerless capture technology. This product streamlines the production process by eliminating the need for markers, saving time for talent, director, and on-set crew. Masquerade3 delivers enhanced stability compared to its predecessor, reducing the need for manual iterations and producing more reliable results straight out of the box. With integrated gaze estimation, Masquerade3 reduces labour costs in animation, VFX and processing, providing a more efficient solution for reconstructing whole performances.

According to Daniel Seah, CEO of Digital Domain, it is adaptable and a “revolutionary advancement in motion capture technology. The markerless capture feature, enhanced stability, and unmatched flexibility make it easier for filmmakers to bring their creative visions to life with more efficiency and effectiveness than ever before”.

Mike Seymour, Hanno Basse, Dan Ring and Issac Bratzel at FMX

fxguide previously covered Masquerade 2.0, which itself was a semi-automatic marker system with advanced machine learning that was 10x times faster than DD’s previous version and, at the time, introduced both eye gaze and eyelid tracking. Please take a look at our story here.

Naturally, as a result, we were keen to speak with Digital Domain’s CTO Hanno Basse at this year’s FMX conference about the new release. At FMX, Hanno joined fxguide’s Mike Seymour in presenting on digital humans. Hanno and Mike were also on a digital human panel with Dan Ring from Choas and Issac Bratzel of AvatarOS.

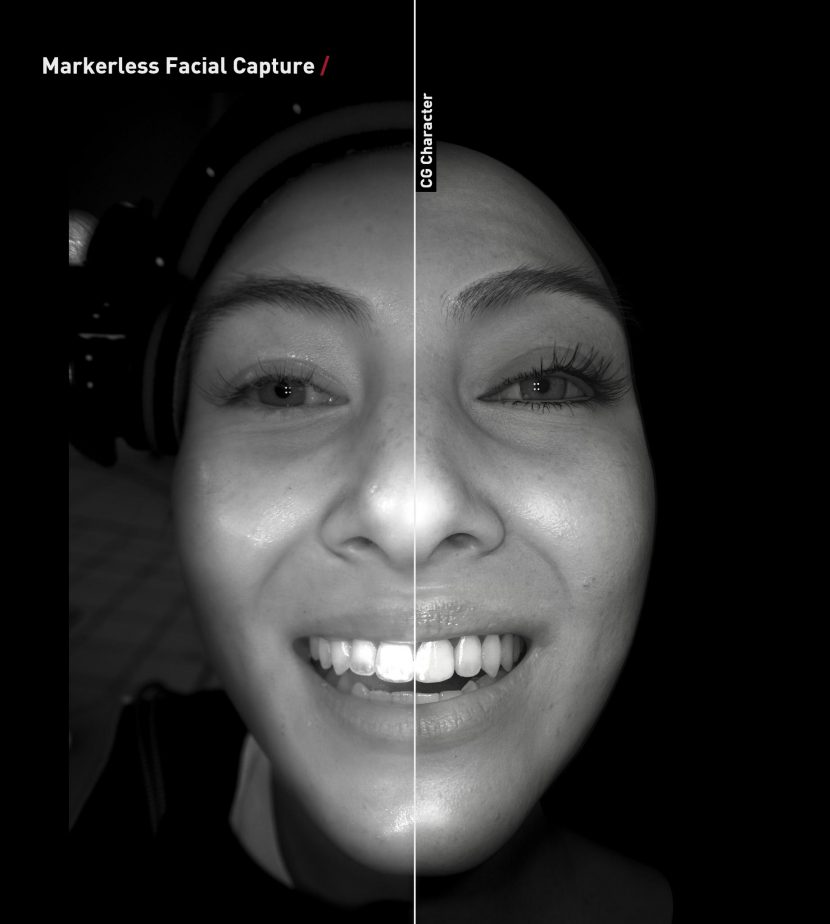

At its core, Masquerade3 is the same conceptual approach that Digital Domain used with Masquerade 1.0 to create Thanos in Avengers: Infinity War. But successive generations of machine learning have refined and automated the process to produce a quicker system with more fidelity to the original actor’s subtle facial performances and acting choices. For example, Masquerade3 is more robust since an inherent limitation of a marker system is how consistent those markers are from one set-up to the next.

Masquerade 2 had to assume the dots were precisely in the same position on the face, where Masquerade3 just relies on the actors actual face. No matter how committed a makeup team was on set, relying on accurate facial markers introduced their own noise to the process. Additionally, variations in the exact angle that the head-mounted camera rig sat on the actor’s head would often require compensation, in Masquerade3 that is a completely solved for a non-issue.

The system’s accuracy has been measured as little as 0.5mm compared to ground truth. While Masquerade 2 could also be highly accurate, the amount of manual work and adjustment to reach such a level of accuracy was much more significant. “We think it looks very convincing,” says Hanno Basse. “It is easier on the production crew, it is easier on the performer, and the workflow in post-production is a lot faster. It is all automated now, in the past cleaning up the markers could be terrible, and we just dont have to do any of that anymore, we get the plate data in, we run the model, and we get the CG out.” There is still work done on a Masquerade3 capture, for example teeth and tongues are all added later, the hair groom etc, but overall the process is just much cleaner and effective.

The workflow is

- Initial identity capture (1 day): Capturing a performer’s ROM, visemes, and a 4D scan to create the high-resolution facial geometry. This one-off day actually uses Masquerade 2.0 – with markers on the face of the actor – to create a ground truth training data set. Markers are not used again after this.

- Training and/or fine-tuning of performer-specific model with that data.

- Ingest markerless plate photography from on-set, then infer the high-res model from this.

As the process is quite forgiving, for background characters, a lower quality but still useable facial animation sequence can be created even without the specific identity capture of that actor. In effect, transferring from a library detailed dataset solution to a new background character can provide enough to populate crowd scenes, etc, without bespoke models. "A single performer could provide a range of expressions," explains Hanno Basse. "And then they can drive many different agents in a crowd, and the more identities we do and capture – the more performances we have in our library – the easier this will get. It is going to be really interesting to see how this develops in the future."